In software testing, test plans and test cases are both essential, but they serve very different purposes. A test plan maps out the big picture (what you're testing, why, and how), while a test case spells out the specific steps needed to validate an individual feature. Mixing them up can lead to confusion, wasted effort, and gaps in test coverage.

This guide covers the key differences between the two documents, the components of each, and practical examples to help you use each one effectively.

What Is a Test Plan?

A test plan is a high-level document that outlines the overall testing strategy for a project or release. It defines the scope of testing, the approach the team will take, the resources involved, and the timeline for execution. The purpose of a test plan is to guide the entire QA process from start to finish, making sure everyone on the team understands the scope, objectives, and responsibilities before any actual testing begins.

A well-written test plan keeps the QA team aligned with project goals. It acts as a roadmap for your test management, helping teams avoid scope creep and manage risk, and it helps ensure that no critical functionality gets overlooked during the testing cycle.

What Does a Test Plan Include?

A test plan documents the key information needed to execute testing effectively. It covers the testing scope, approach, team responsibilities, and potential risks. Each component serves a specific purpose in keeping the QA process organized and focused.

Scope

The scope defines which features, modules, and functionalities are included in the testing effort and which are excluded from the current cycle. It sets clear boundaries to keep the team focused and prevents confusion about priorities.

Objectives

Objectives state the specific goals the testing effort aims to achieve. These might include validating core functionality, verifying bug fixes, and confirming that the software meets defined quality standards. Clear objectives help the team prioritize and measure whether testing was successful.

Test Strategy

The test strategy explains the overall approach to testing the software. It covers the types of testing that will be performed (functional, regression, performance, or security), whether tests will be manual or automated, and how execution will be handled across different environments.

Resources

Resources identify the team members involved in testing and the tools required for execution. These include QA engineers, test environments, automation frameworks, and any third-party tools needed to support the effort. Documenting resources up front helps with allocation and surfaces any gaps before testing begins.

Environment Details

Environment details specify the testing infrastructure, including hardware, operating systems, browsers, databases, and network configurations. Documenting them helps ensure that tests run in conditions that closely match production, leading to more accurate results and fewer issues after release.

Schedule

The schedule outlines the timeline for testing, including start and end dates, milestones, and deadlines for different test phases. A realistic schedule gives the team enough time to test thoroughly and provides stakeholders with visibility into when testing will be complete.

Risk Management

Risk management identifies potential issues that could impact testing or product quality. This might include tight deadlines, limited resources, or unstable areas of the application. Identifying risks early enables the team to plan effective mitigation strategies and prioritize critical areas for additional coverage.
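To make the components above concrete, here is a minimal sketch of a test plan captured as structured data. The class name `ReleaseTestPlan`, its field names, and all sample values are illustrative assumptions, not a standard format; the helper only reports which sections are still empty.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class ReleaseTestPlan:
    """Hypothetical container for the test plan components described above."""
    in_scope: list[str]
    out_of_scope: list[str]
    objectives: list[str]
    strategy: dict[str, str]      # test type -> "manual" or "automated"
    resources: list[str]
    environments: list[str]
    start: date
    end: date
    risks: list[str] = field(default_factory=list)

    def missing_sections(self) -> list[str]:
        """Return the names of list or dict sections that are still empty."""
        sections = {
            "in_scope": self.in_scope,
            "objectives": self.objectives,
            "strategy": self.strategy,
            "resources": self.resources,
            "environments": self.environments,
            "risks": self.risks,
        }
        return [name for name, value in sections.items() if not value]


# Sample values are made up for illustration.
plan = ReleaseTestPlan(
    in_scope=["login", "checkout"],
    out_of_scope=["admin reports"],
    objectives=["verify bug fixes in checkout"],
    strategy={"functional": "manual", "regression": "automated"},
    resources=["2 QA engineers", "staging environment"],
    environments=["Chrome on Windows 11"],
    start=date(2025, 1, 6),
    end=date(2025, 1, 17),
)
print(plan.missing_sections())  # risks were never filled in
```

A check like this can flag an incomplete plan, such as one with no documented risks, before testing starts.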

Best Practices to Create a Test Plan

A strong test plan provides clear direction without unnecessary complexity. It doesn't have to be lengthy or overly detailed; it just needs to be clear and actionable. Here are the key practices that keep test plans effective and relevant.

Keep the Test Plan Concise

Focus on essential information that guides execution and decision making, including scope, strategy, resources, timelines, and risks. Long test plans are rarely read or maintained, defeating the purpose of a test plan. Keep the plan concise so it stays relevant and gets referenced throughout the testing cycle.

Align the Test Plan with Requirements

The test plan should clearly reflect project requirements and acceptance criteria. Review user stories, specifications, and business goals to confirm that your testing scope covers the right functionality. Misalignment leads to testing the wrong features or missing critical areas. Regular alignment with product managers and developers keeps the plan grounded in actual project needs.
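One way to check this alignment is a simple traceability lookup: list the requirement IDs, record which requirements each test covers, and report anything left uncovered. The sketch below assumes hypothetical IDs such as `REQ-1` and `TC-LOGIN-001`; the function name is likewise illustrative.

```python
def uncovered_requirements(
    requirements: set[str], coverage: dict[str, set[str]]
) -> list[str]:
    """Return requirement IDs that no test case claims to cover."""
    covered: set[str] = set()
    for covered_by_case in coverage.values():
        covered |= covered_by_case
    return sorted(requirements - covered)


# Hypothetical requirement and test case IDs.
requirements = {"REQ-1", "REQ-2", "REQ-3"}
coverage = {
    "TC-LOGIN-001": {"REQ-1"},
    "TC-CART-002": {"REQ-1", "REQ-3"},
}
print(uncovered_requirements(requirements, coverage))  # ['REQ-2']
```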

Identify Risks Early

Identify potential problems before testing begins so the team can prepare accordingly. Common risks include tight deadlines, complex integrations, external dependencies, or unstable features. Calling out risks allows the team to allocate extra coverage, adjust timelines, and prepare backup plans.

Keep the Test Plan Flexible

Focus on high-level strategy rather than rigid details. Treat the test plan as a living document that gets updated as requirements and priorities change and as the team learns lessons during testing. A flexible plan adapts to change and stays useful throughout the release cycle.

What Is a Test Case?

A test case is a set of conditions, steps, and expected results used to validate that a specific feature works correctly. It provides clear instructions that testers follow to check whether the software produces the expected result. Test cases are designed to be repeatable so any team member can execute them consistently. Their purpose is to verify functionality, catch defects, and provide a clear record of test execution and outcomes.

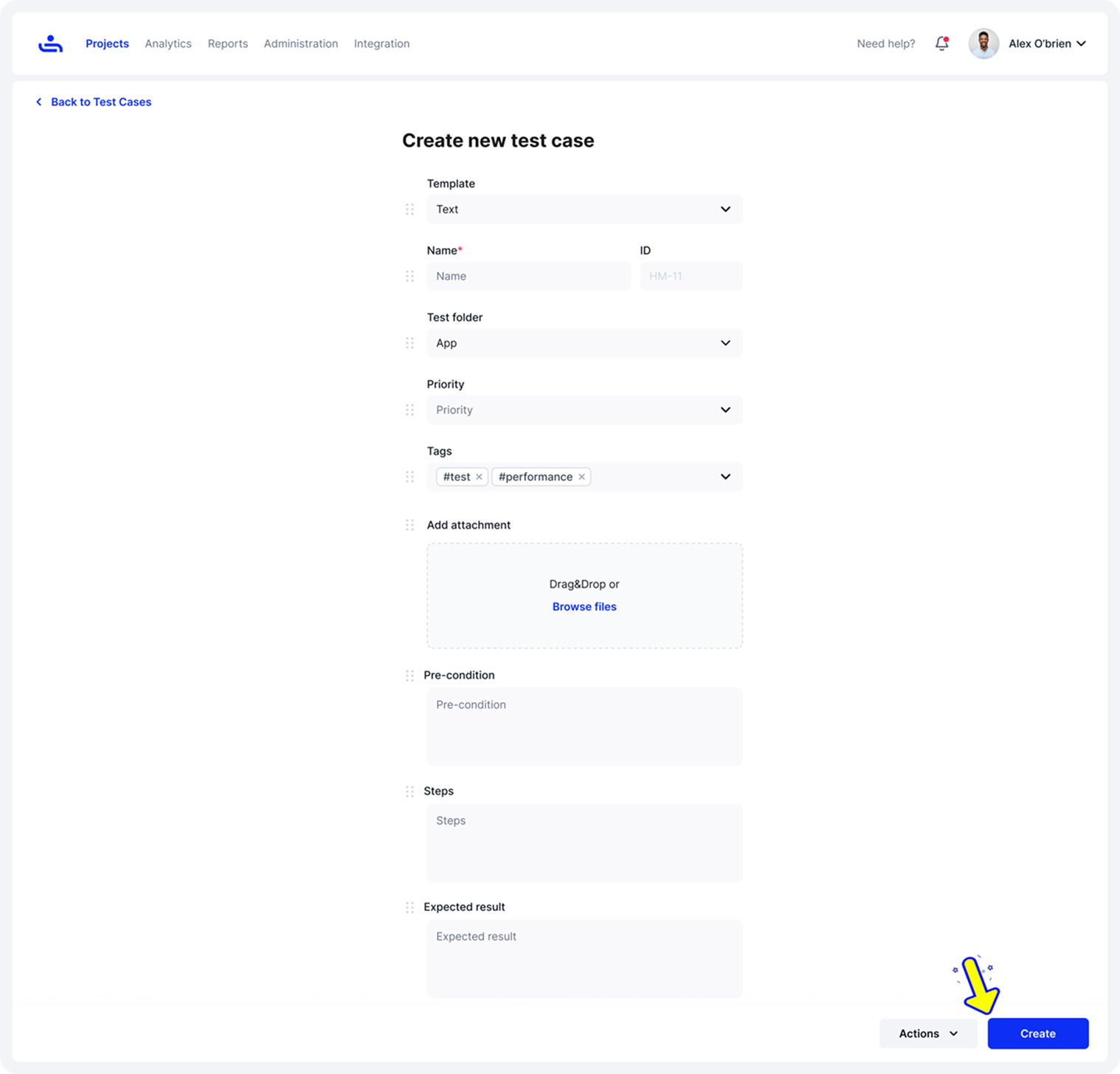

What Does a Test Case Include?

A well-structured test case includes key elements that make it easy to execute, understand, and track. Each component serves a specific purpose, and documenting them consistently keeps the QA process organized so that any team member can run the tests without confusion.

Test Case ID

The test case ID is a unique identifier assigned to each test case. It helps teams organize, reference, and track tests in large suites. A clear ID structure makes it easy to locate specific tests, link them to requirements, and report results.
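As an illustration, the sketch below assumes one possible ID convention, `TC-<AREA>-<three-digit number>` (for example `TC-LOGIN-001`). The convention and the helper names are assumptions made for this example; any consistent scheme works the same way.

```python
import re

# Assumed ID format: "TC-<AREA>-<3-digit number>", e.g. "TC-LOGIN-001".
ID_PATTERN = re.compile(r"TC-([A-Z]+)-(\d{3})")


def parse_test_id(test_id: str) -> tuple[str, int]:
    """Split an ID into (area, number), rejecting anything off-format."""
    match = ID_PATTERN.fullmatch(test_id)
    if match is None:
        raise ValueError(f"unexpected test case ID: {test_id!r}")
    return match.group(1), int(match.group(2))


def next_id(existing: list[str], area: str) -> str:
    """Return the next free ID for an area, based on the highest one in use."""
    numbers = [num for a, num in map(parse_test_id, existing) if a == area]
    following = max(numbers, default=0) + 1
    return f"TC-{area}-{following:03d}"


ids = ["TC-LOGIN-001", "TC-LOGIN-007", "TC-CART-002"]
print(next_id(ids, "LOGIN"))  # TC-LOGIN-008
```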

Test Title

The test title provides a clear description of what the test validates. A good title is specific and action-oriented, making the test's purpose immediately obvious. For example, "Verify login with valid credentials" is better than "Login test" because it states exactly what's being checked. Clear titles make test suites easier to navigate and help teams find relevant tests quickly.

Preconditions

Preconditions define the setup required before executing the test. This includes user permissions, system states, required data, or specific configurations. Documenting preconditions prevents test failures caused by improper setup and maintains consistent results across test runs.

Test Steps

Test steps are the specific actions a tester performs to execute the test. Each step should be clear, sequential, and easy to follow without prior context. Steps should focus on user actions rather than technical details, which makes them easier to understand and maintain.

Expected Results

Expected results define what should happen when the test steps are executed correctly. They provide the benchmark for pass or fail decisions. Each expected result should be specific and measurable. Clear expected results make it easy to identify defects during execution.

Test Data

Test data includes the specific inputs and values used during execution. This might include usernames, passwords, sample files, or database records. Documenting test data ensures tests can be repeated accurately and helps testers prepare their environment.
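Put together, the components above might look like the following sketch. The class name `ManualTestCase`, its field names, and the sample login data are illustrative assumptions rather than a required format.

```python
from dataclasses import dataclass, field


@dataclass
class ManualTestCase:
    """Hypothetical record holding the test case components described above."""
    case_id: str
    title: str
    preconditions: list[str]
    steps: list[str]
    expected_results: list[str]
    test_data: dict[str, str] = field(default_factory=dict)

    def render(self) -> str:
        """Format the test case as plain text a tester can follow."""
        lines = [f"{self.case_id}: {self.title}", "Preconditions:"]
        lines += [f"  - {p}" for p in self.preconditions]
        lines.append("Steps:")
        lines += [f"  {i}. {s}" for i, s in enumerate(self.steps, start=1)]
        lines.append("Expected results:")
        lines += [f"  - {r}" for r in self.expected_results]
        return "\n".join(lines)


# Sample values are made up for illustration.
login_case = ManualTestCase(
    case_id="TC-LOGIN-001",
    title="Verify login with valid credentials",
    preconditions=["A registered user account exists"],
    steps=["Open the login page", "Enter the username and password", "Click 'Log in'"],
    expected_results=["The dashboard page is displayed"],
    test_data={"username": "demo_user", "password": "demo_pass"},
)
print(login_case.render())
```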

Best Practices to Create a Test Case

Writing effective test cases requires clarity, focus, and consistency. A well-written test case should be easy to understand, simple to execute, and provide clear pass or fail criteria. Following proven practices helps teams create test cases that improve coverage, reduce execution time, and make maintenance easier as the software evolves.

Write Clear and Specific Steps

Each test step should describe a single action in simple, direct language. Clear steps eliminate confusion during execution and ensure different testers get the same results. The goal is for anyone on the team to execute the test without needing additional context or clarification.

Keep One Objective Per Test Case

Each test case should validate a single functionality or scenario. Testing multiple objectives in one case makes it harder to identify what failed when a test doesn't pass. Keeping tests separate also makes it easier to track coverage and rerun specific scenarios without running extra, unrelated steps.
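The same principle carries over to automated tests. The sketch below uses pytest-style test functions against a hypothetical in-memory `authenticate` function, written only for this example; each test checks exactly one scenario, so a failure points straight at the behavior that broke.

```python
# Hypothetical system under test: a tiny in-memory login check.
USERS = {"alice": "s3cret"}


def authenticate(username: str, password: str) -> bool:
    """Return True only when the username exists and the password matches."""
    return USERS.get(username) == password


def test_login_succeeds_with_valid_credentials():
    assert authenticate("alice", "s3cret") is True


def test_login_fails_with_wrong_password():
    assert authenticate("alice", "wrong") is False


def test_login_fails_for_unknown_user():
    assert authenticate("bob", "s3cret") is False
```

Had all three checks lived in a single test, the first failing assertion would hide the results of the other two.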

Use Reusable Components for Common Steps

Many test cases share common actions like logging in, navigating to a page, or setting up data. Creating reusable steps or components for these repeated actions saves time and reduces duplication. When a shared step needs updating, you only change it once instead of editing dozens of individual test cases.
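A minimal sketch of this idea: shared steps live in one place, and test cases refer to them by name. The `shared:<name>` reference syntax and the step wording are assumptions made for this example, not a feature of any particular tool.

```python
# Shared steps defined once; names and wording are illustrative.
SHARED_STEPS = {
    "login": ["Open the login page", "Enter valid credentials", "Click 'Log in'"],
}

PREFIX = "shared:"


def expand_steps(steps: list[str]) -> list[str]:
    """Replace 'shared:<name>' references with the steps they stand for."""
    expanded: list[str] = []
    for step in steps:
        if step.startswith(PREFIX):
            expanded.extend(SHARED_STEPS[step[len(PREFIX):]])
        else:
            expanded.append(step)
    return expanded


login_then_profile = ["shared:login", "Open the profile page", "Change the display name"]
login_then_logout = ["shared:login", "Click 'Log out'"]

# Editing the shared step once changes every test case that references it.
SHARED_STEPS["login"][2] = "Click the 'Sign in' button"
print(expand_steps(login_then_profile))
print(expand_steps(login_then_logout))
```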

Define Clear Expected Results

Expected results should be specific and measurable, not subjective statements. Clear expected results eliminate guesswork and make it easy to determine pass or fail during execution. They also help catch edge cases where the software technically works but doesn't meet actual requirements.
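The contrast is easy to see in code. In the sketch below, `apply_discount` is a hypothetical function written only for this example. The first check is vague and passes for almost any result, while the second states the exact expected value.

```python
def apply_discount(subtotal_cents: int, percent: int) -> int:
    """Hypothetical function under test; integer cents avoid float rounding."""
    return subtotal_cents - (subtotal_cents * percent) // 100


def test_discount_vague():
    # Vague: "the total looks reasonable" passes for almost any positive value.
    assert apply_discount(20_000, 10) > 0


def test_discount_specific():
    # Specific: 10% off 200.00 must be exactly 180.00 (18,000 cents).
    assert apply_discount(20_000, 10) == 18_000
```

If the discount were applied twice by mistake, only the specific test would fail.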

Review and Update Test Cases Regularly

Test cases become outdated as features change, bugs get fixed, and new functionality gets added. Schedule regular reviews to remove obsolete tests, update steps that no longer match the current software, and add coverage for new scenarios.
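A review schedule can be backed by a simple staleness check. The sketch below assumes each test case records the date it was last reviewed and treats anything older than 90 days as due for review; the threshold, function name, and sample dates are all illustrative.

```python
from datetime import date, timedelta


def stale_cases(
    last_reviewed: dict[str, date], today: date, max_age_days: int = 90
) -> list[str]:
    """Return IDs of test cases last reviewed more than max_age_days ago."""
    cutoff = today - timedelta(days=max_age_days)
    return sorted(
        case_id for case_id, reviewed in last_reviewed.items() if reviewed < cutoff
    )


# Sample review dates are made up for illustration.
reviews = {
    "TC-LOGIN-001": date(2025, 1, 15),
    "TC-CART-002": date(2025, 5, 20),
}
print(stale_cases(reviews, today=date(2025, 6, 1)))  # ['TC-LOGIN-001']
```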

Core Differences Between a Test Plan and a Test Case

While test plans and test cases are both critical to the QA process, they serve completely different purposes and operate at different levels of detail. A test plan provides the strategic direction for the entire testing effort, while test cases focus on validating specific functionality. Understanding these differences helps teams use each document effectively and avoid confusion about what information belongs where.

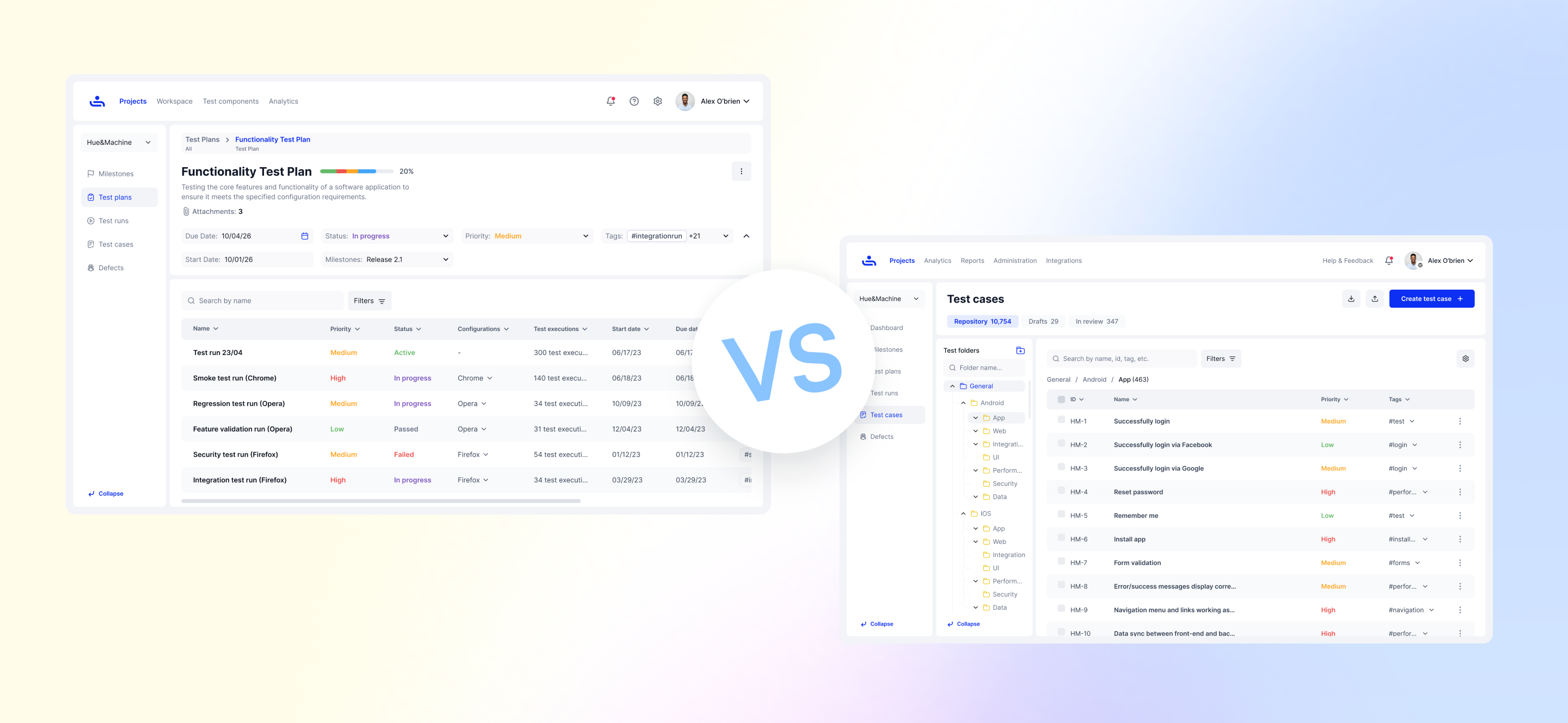

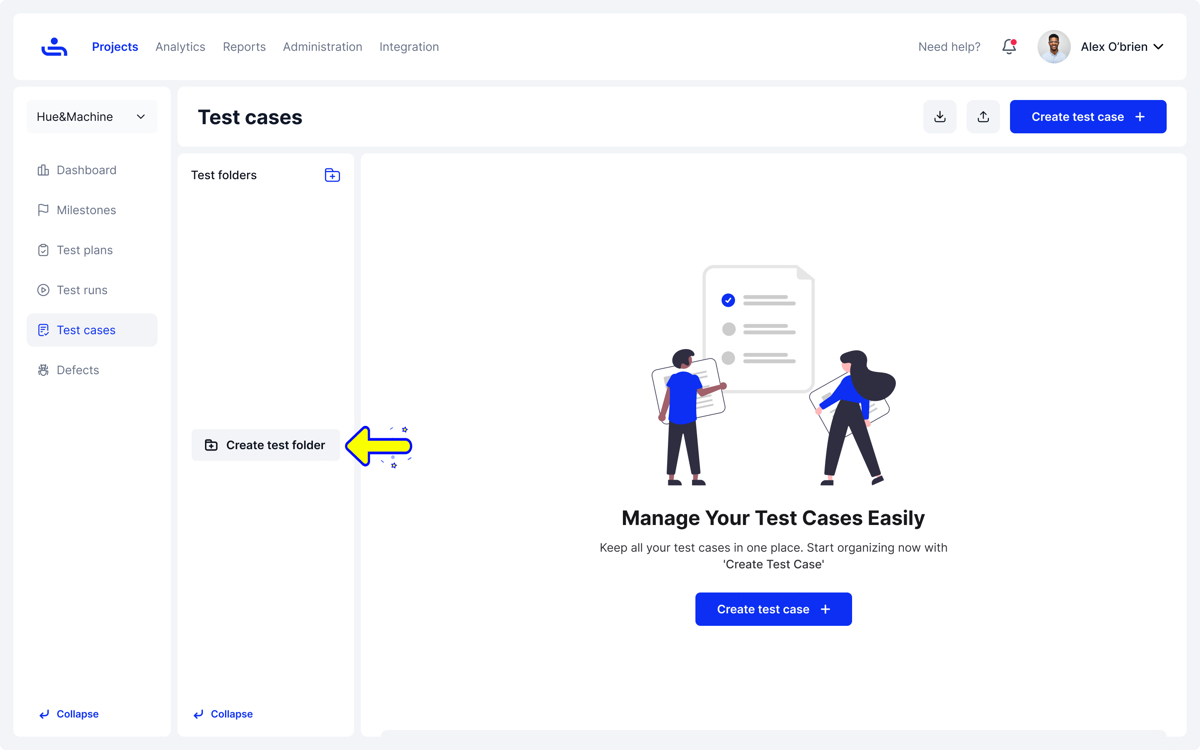

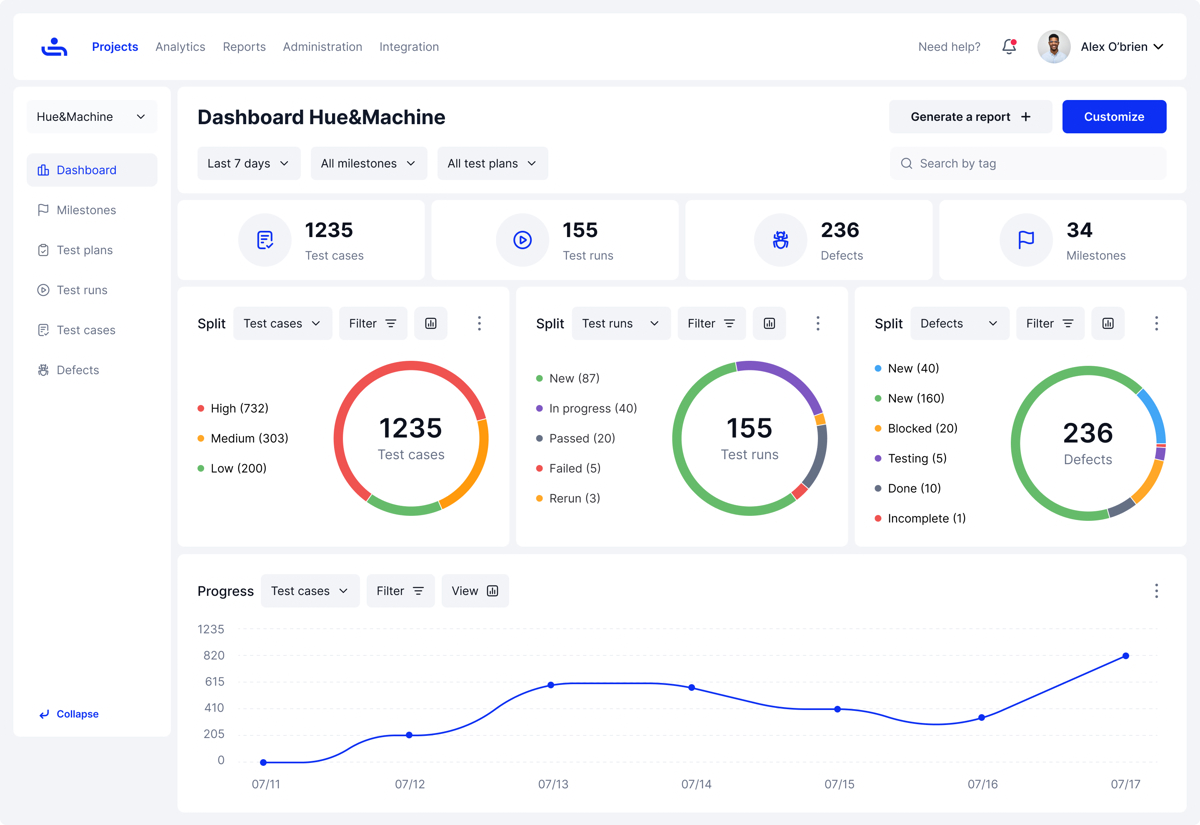

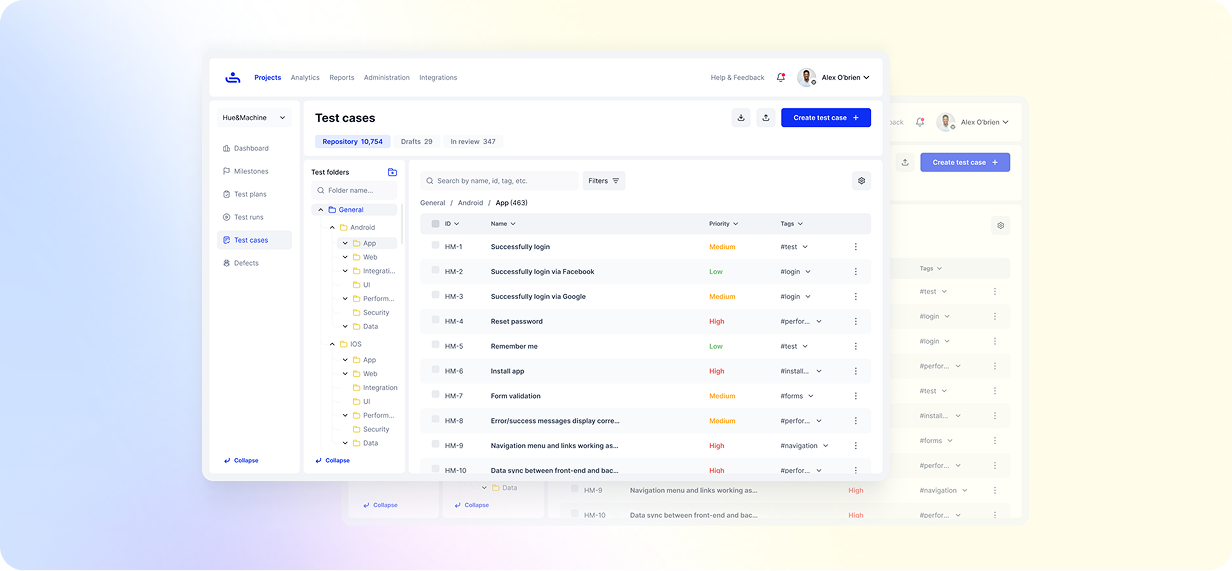

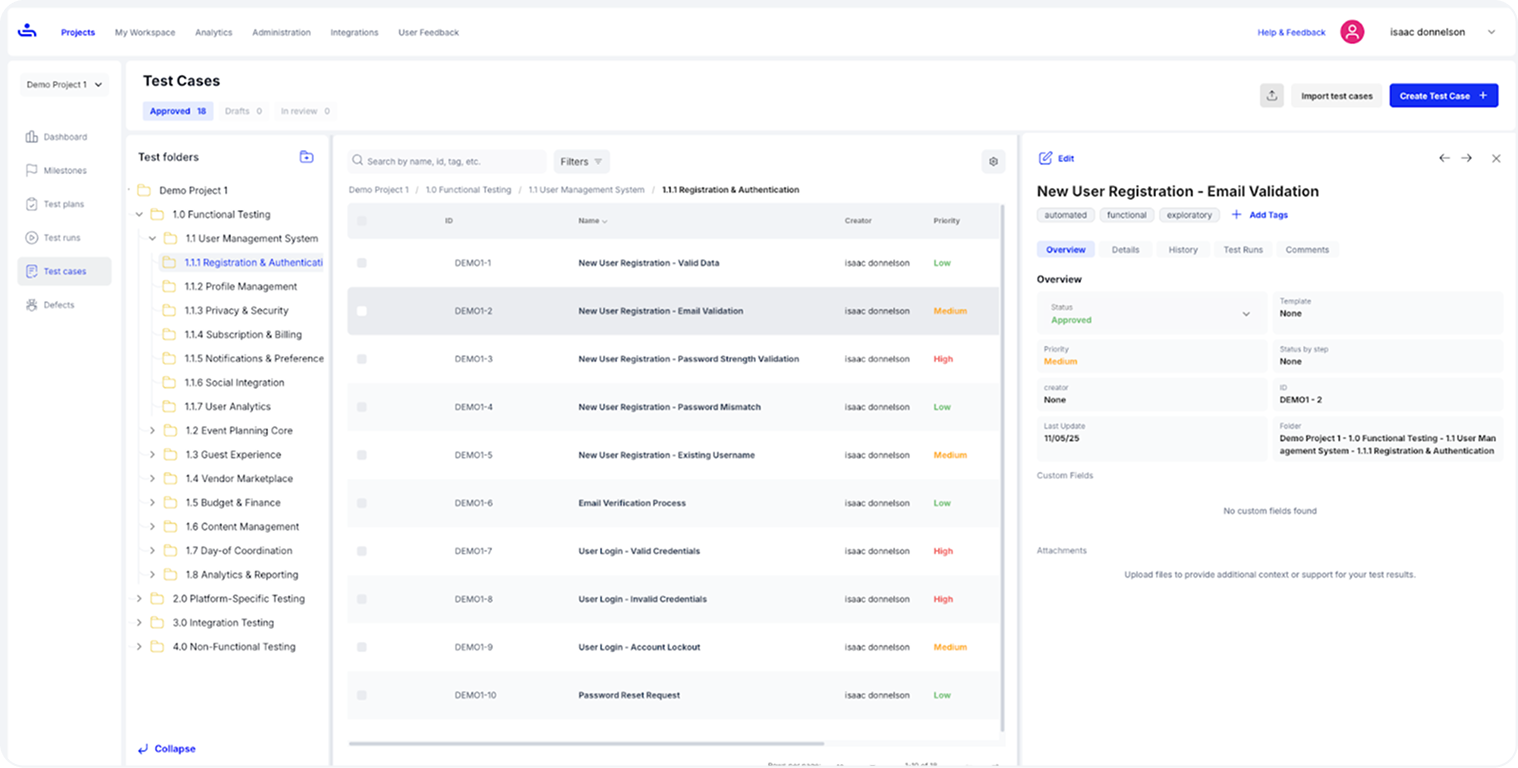

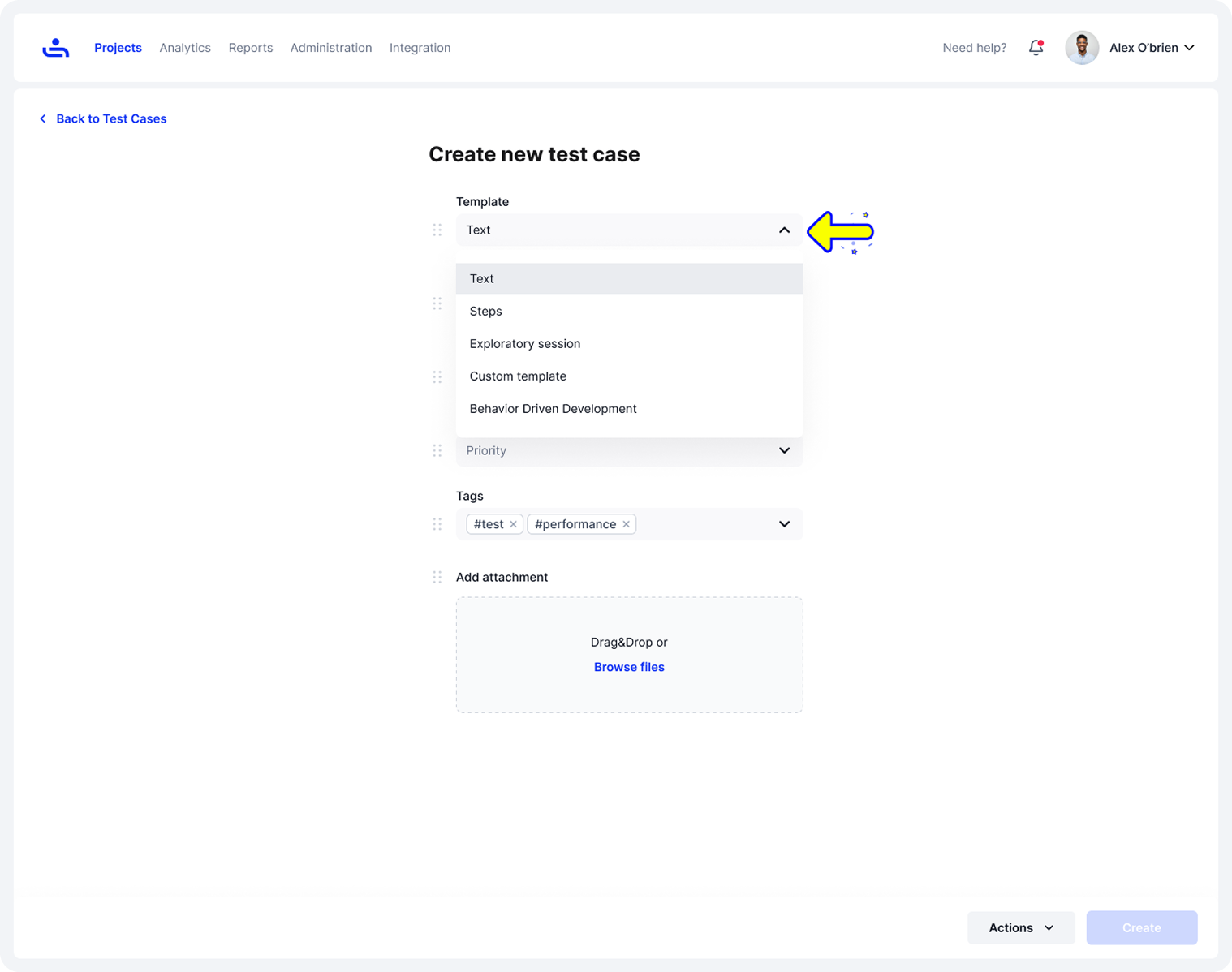

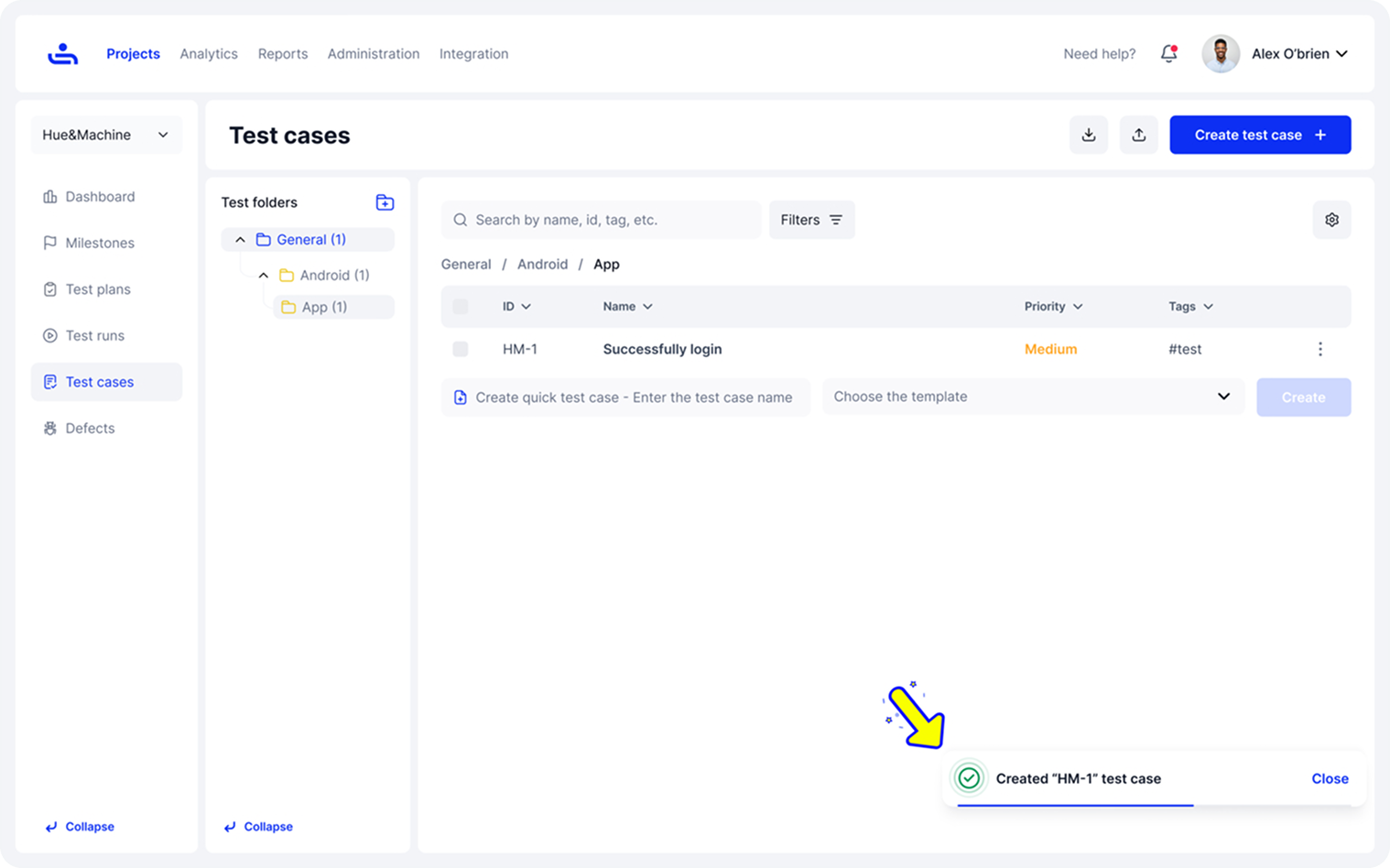

Managing Test Plans and Test Cases With the TestFiesta Test Management Tool

The challenges outlined in this guide (keeping test plans aligned with changing requirements, avoiding duplicated test steps, and maintaining test cases as features evolve) become easier to manage with the right tool. TestFiesta addresses these pain points by supporting both test plans and test cases in a single flexible platform that adapts to how your team actually works.

- Shared steps for efficiency – Create reusable actions once, and when you update the shared step, those changes sync across all related test cases, reducing repetitive manual edits.

- Dynamic organization with tags – Categorize and filter tests by priority, test type, or custom criteria without being locked into static folder structures.

- Custom fields for project-specific needs – Add fields that matter to your workflow, from compliance requirements to environment details.

- Adaptable workflows – Build testing processes that match how your team actually works, not how a tool forces you to work.

Conclusion

Understanding the difference between test plans and test cases is fundamental to running an effective QA process. A test plan sets the strategic direction for your testing effort, while test cases validate that individual features work as expected. Using both documents correctly helps teams maintain clear test coverage, avoid wasted effort, and catch issues before they reach production. When your test plans stay aligned with project goals and your test cases remain focused and maintainable, testing becomes more efficient and reliable.

Ready to streamline how you manage both? Sign up for a free TestFiesta account and see how flexible test management makes a difference.

FAQs

What Is a Test Plan and Why Is It Important?

A test plan is a high-level document that outlines the testing strategy, scope, resources, and timeline for a project or release. It's important because it provides direction and alignment for the entire QA team before testing begins. Without a test plan, teams risk testing the wrong features, missing critical functionality, or wasting time on unclear priorities.

What Is the Difference Between Test Cases and Test Plans?

Test plans define the overall testing strategy and approach for a project, while test cases provide specific steps to validate individual features. A test plan focuses on the big picture: the scope, objectives, resources, timeline, and risks involved in the testing effort. Test cases focus on execution: the exact steps a tester follows, the expected results, and the data needed to verify specific functionality.

Who Uses Test Plans vs Test Cases?

Test plans are used by QA leads, project managers, stakeholders, and development teams to understand the overall testing strategy and align on scope and timelines. Test cases are used primarily by QA testers and engineers who execute the actual testing. While test plans provide direction for decision-makers, test cases provide the detailed instructions that testers follow during execution.

What Is the Difference Between a Test Plan and Test Design?

A test plan outlines the overall testing strategy, scope, and approach for a project, while test design focuses on how specific tests will be structured and what scenarios will be covered. Test design happens after the test plan is defined and involves identifying test conditions, creating test scenarios, and determining the test data needed.

Are Test Plans and Test Cases Both Used in a Single Project?

Yes, test plans and test cases are both used in a single project and complement each other throughout the testing process. The test plan is created first to establish the overall strategy and scope, and then test cases are written to execute that strategy.